Submitted by Diane L. Lister on Wed, 09/07/2025 - 10:56

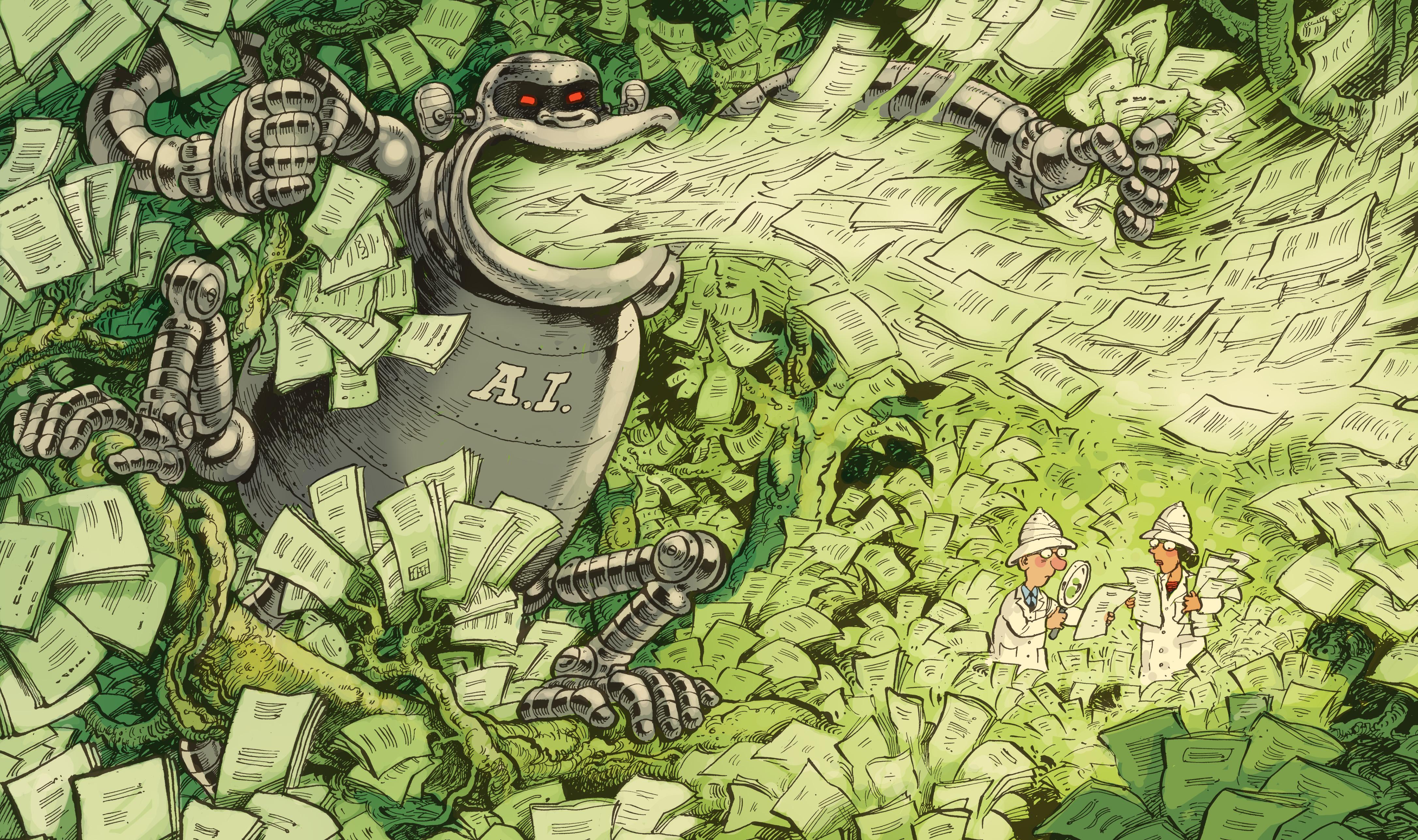

The accelerating rise of AI-generated fraudulent academic papers is fuelling an existential crisis for evidence synthesis. But AI also offers hope, both in speeding up literature searches and in detecting these very same phony papers. In a comment piece published in Nature yesterday, we suggest a way to safeguard evidence synthesis against the rising tide of "poisoned literature" and ensure the integrity of scientific discovery.

Current methods for rigorous systematic literature review are expensive and slow. Authors are already struggling to keep up with the rapidly expanding number of legitimate papers.

On top of this, the number of paper retractions is increasing almost exponentially. Systematic reviews are already citing retracted papers unknowingly, and most of these reviews remain uncorrected even a year after notification.

Large Language Models (LLMs) are poised to flood the scientific landscape with convincing, fake manuscripts and doctored data, potentially overwhelming our current ability to distinguish fact from fiction.

This March, the AI Scientist (an AI tool developed by the company Sakana AI in Tokyo and its collaborators) formulated hypotheses, designed and ran experiments, analysed the results, generated the figures and produced a manuscript that passed peer review for a workshop at a leading machine learning conference.

Creating a ‘living oracle’ of scientific knowledge

But there is hope! A revolutionary network approach that combines AI-powered, subject-wide evidence synthesis with human oversight and a decentralised network of living evidence databases could harness the best of AI. This dynamic system would continuously gather, screen and index literature, automatically removing compromised studies and recalculating results, to create a robust and transparent "living oracle" of scientific knowledge.
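To make the "removing compromised studies and recalculating results" step concrete, here is a minimal Python sketch. It is an illustration only, not the system proposed in the Nature piece: it assumes a simple fixed-effect, inverse-variance pooled estimate, and the `Study` record, the `living_estimate` function and all of the numbers are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class Study:
    study_id: str    # hypothetical identifier, e.g. a DOI in a real database
    effect: float    # reported effect size
    variance: float  # variance of that effect size


def pooled_effect(studies):
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    if not studies:
        raise ValueError("no studies left to pool")
    weights = [1.0 / s.variance for s in studies]
    total = sum(weights)
    estimate = sum(w * s.effect for w, s in zip(weights, studies)) / total
    return estimate, sqrt(1.0 / total)


def living_estimate(studies, retracted_ids):
    """Drop studies flagged as compromised, then recalculate the pooled result."""
    kept = [s for s in studies if s.study_id not in retracted_ids]
    return pooled_effect(kept)


# Made-up example data (assumption): study "C" is later flagged as retracted.
studies = [
    Study("A", 0.30, 0.04),
    Study("B", 0.10, 0.02),
    Study("C", 0.90, 0.01),
]

before = living_estimate(studies, retracted_ids=set())
after = living_estimate(studies, retracted_ids={"C"})
print(f"before retraction: {before[0]:.3f}")
print(f"after retraction:  {after[0]:.3f}")
```

In this toy example the flagged study carries the largest weight, so removing it changes the pooled estimate substantially. That is the kind of update a living database would need to make automatically, with human oversight of which studies are flagged.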

Read the full article in Nature: https://www.nature.com/articles/d41586-025-02069-w

Photo credit: David Parkins